Recent advances in artificial intelligence have seen Large Language Models (LLMs) pass the famous Turing Test in conversational settings, a milestone in AI development. This achievement, demonstrated in recent empirical studies [1], shows how closely AI-driven dialogue systems have come to mimic genuine human interaction. It should also remind us that progress will not stop here but will extend to other applications of language technologies.

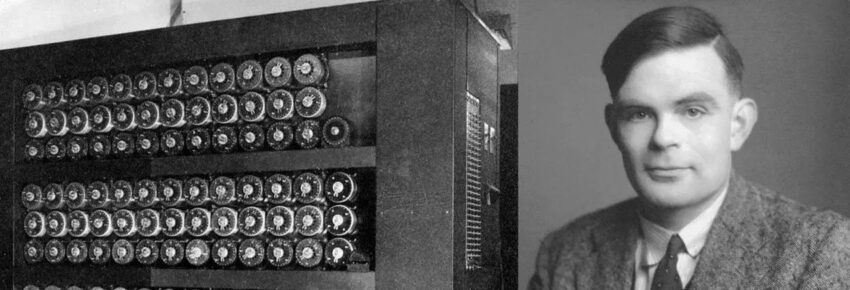

Seventy-five years ago, Alan Turing (1950) proposed the “imitation game” as a means of assessing whether machines could genuinely exhibit intelligence. In this game, now commonly known as the Turing Test, a human interrogator engages simultaneously with two unseen participants (one human and one machine) through a text-based interface. Both participants try to convince the interrogator that they are the real human. If the interrogator cannot consistently distinguish between the human and the machine, the machine is considered to have passed the test, demonstrating its capacity to imitate human-like intelligence. In other words, the Turing Test is a simple way to determine whether a machine can produce responses indistinguishable from those of a human during conversation.

Recently, AI-driven speech translation technologies have improved dramatically, reaching unprecedented accuracy and fluency. As this rapid progress continues, I believe the time has come to design a specific Turing Test for Speech Translation. This specialized Turing Test would serve as the ultimate benchmark for assessing whether AI translation agents have reached parity with human interpreters in real-time scenarios. Let’s be clear: as of now, any current system would fail, often quite dramatically. However, this is bound to change, and comparable quality might arrive sooner than we expect. What we are missing is a robust, high-stakes equivalent of the Turing Test for speech translation: a way to systematically evaluate whether a translated message, specifically in speech, is functionally indistinguishable from what a professional human interpreter would deliver in the same context.

Why specifically propose a Turing Test for speech translation? First, there is an important philosophical dimension. Successfully creating an AI agent capable of translating speech in real time, as effectively and accurately as a human interpreter, represents more than a technological achievement; it would mark a profound milestone in our understanding of both human cognition and AI capabilities (cognitive scientists have already studied simultaneous interpreting to gain deeper insight into the workings of the human brain [2]). Passing this test would thus be remarkable and fascinating. It would indicate that we have developed technology sophisticated enough to master one of the most cognitively demanding human tasks: the immediate and precise comprehension and translation of nuanced language.

However, a Turing Test for speech translation is not only about philosophy; it also has significant practical implications. As AI-powered speech translation becomes more prevalent across sectors, robust evaluation frameworks become paramount, especially in regulated markets such as healthcare and legal services. Evaluation helps ensure the reliability, quality, and trustworthiness of these systems. A Turing Test for speech translation could encourage the development of more specialized and pragmatic evaluation methods, i.e., methods suited to real-life contexts. Currently, we lack comprehensive and reliable approaches specifically designed to assess real-time speech translation in authentic communicative scenarios. By this, I do not mean standardized metrics such as BLEU, METEOR, or COMET, or improved approaches such as the one proposed by Chen et al. [3]; these scores alone do not fully capture the real-world usability of translation systems, nor do they indicate whether a system meets practical quality standards. While obviously useful for researchers and developers, such metrics have limited applicability in real-life contexts. Some efforts have been made in the past, for example in our paper comparing human and AI interpreting [4], or in our attempt to find correlations between human and machine evaluations of interpreting performance [5]. But these are only preliminary studies, with little to no application in real-life scenarios.

Importantly, robust evaluation mechanisms will pave the way for the necessary certifications, fostering greater confidence among stakeholders and regulatory bodies. Certifiable evaluations can provide some assurance that AI speech translation solutions meet stringent performance criteria, even though absolute certainty is elusive in language-related tasks. Certifications will become a necessity in regulated markets, as they already are for human interpreters.

One clarification is necessary to avoid misunderstandings: the Turing Test for Speech Translation is not intended as a method for determining whether a speech translation system is suitable for a particular real-world scenario. The reason is straightforward: achieving indistinguishability between a human and a machine does not necessarily imply that the translation quality is adequate for its intended purpose. In fact, translation quality could still fall short of practical requirements even when human-machine parity is achieved. Conversely, complete parity with human performance in the imitation game may not be necessary for a speech translation system to be practically effective, provided that other criteria relevant to the specific context of use are satisfied.

What a “Speech Translation Turing Test” Might Look Like

In a nutshell, imagine a blind study where:

- Human listeners judge live or recorded translations.

- The source language is spoken naturally.

- They have to determine: was this translated by a human interpreter or by an AI system?

If, for five minutes or more, they can’t tell the difference, then we might consider that a pass. Three considerations:

a) A preliminary distinction must be made between speech-to-text and speech-to-speech translation systems. The latter face significantly greater challenges in passing the Turing Test because of the inherent complexities of spoken language, such as intonation, rhythm, and spontaneous speech patterns, which are notably harder for machines to replicate convincingly than written language. The ultimate Turing Test for speech translation would apply to speech-to-speech systems, but we should probably start with speech-to-text scenarios.

b) Machines might outperform humans in some respects (speed of delivery, precision, etc.), so judges could tell them apart not because of poor performance but because of superhuman performance. This issue is also known from the original Turing Test.

c) How should judges decide? This is the tricky part, because the evaluation has a multilingual component and requires clear decision criteria. The highest degree of reliability would come from having experts (practically speaking, other interpreters) make the decision. However, since interpreters might be biased, a better alternative would be to involve expert bilinguals in a two-step evaluation: first, they evaluate only the target translation within a clearly defined use case (potentially adding questions to assess downstream task success, e.g., “Can the judge make the correct decision based solely on the translation?”); second, they compare the target translation directly against the original.
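One way to make “they can’t tell the difference” operational, offered here as an assumption rather than part of the proposal, is to record each judgement as a human-or-machine guess and check whether judges identify the source more often than chance. The minimal sketch below uses an exact one-sided binomial test from Python’s standard library; the function names `binomial_p_value` and `evaluate_blind_study`, the 50% chance level, and the 0.05 threshold are all hypothetical, illustrative choices.

```python
from math import comb


def binomial_p_value(correct, trials, chance=0.5):
    """Exact one-sided binomial test: probability of at least `correct`
    right answers out of `trials` if judges were only guessing."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )


def evaluate_blind_study(judgements, alpha=0.05):
    """Score a hypothetical blind study.

    judgements: list of (true_source, guessed_source) pairs, where each
    value is "human" or "machine". The alpha threshold is an assumption.
    """
    trials = len(judgements)
    if trials == 0:
        raise ValueError("need at least one judgement")
    correct = sum(1 for truth, guess in judgements if truth == guess)
    p_value = binomial_p_value(correct, trials)
    return {
        "trials": trials,
        "accuracy": correct / trials,
        "p_value": p_value,
        # Judges not significantly better than chance -> counted as a pass.
        "passes": p_value >= alpha,
    }


if __name__ == "__main__":
    # Made-up illustrative data: 20 judgements, 11 of them correct.
    study = (
        [("human", "human")] * 6
        + [("machine", "machine")] * 5
        + [("human", "machine")] * 4
        + [("machine", "human")] * 5
    )
    result = evaluate_blind_study(study)
    print(result["accuracy"], round(result["p_value"], 4), result["passes"])
```

With these made-up numbers the script prints `0.55 0.4119 True`. A non-significant result only means the judges did not reliably separate the two in that sample; it is not proof of parity, and small studies will “pass” too easily. Because every correct identification counts, whatever gave the machine away, this scoring also reflects consideration b): a system spotted for being superhuman still fails.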

Conclusions

In conclusion, recent advances in artificial intelligence, particularly in Large Language Models, have produced systems that pass the Turing Test in conversational settings, and AI-driven conversational and translation systems show remarkable potential. However, we still lack a Turing Test tailored specifically to speech translation, one of the most demanding language activities performed by humans. Designing and implementing such an evaluation would be interesting not only from a philosophical perspective, offering deeper insights into human cognition and AI capabilities, but also from a practical standpoint, as it could stimulate the development of robust evaluation methodologies and thereby help ensure reliability and trustworthiness in real-world applications.

Bibliography

- [1] Jones, C.R., Bergen, B.K., 2025. Large Language Models Pass the Turing Test.

- [2] See, for example, Fereira et al., 2020: https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2020.548755/full

- [3] Chen, M., Duquenne, P.-A., Andrews, P., Kao, J., Mourachko, A., Schwenk, H., Costa-jussà, M.R., 2022. BLASER: A Text-Free Speech-to-Speech Translation Evaluation Metric. https://doi.org/10.48550/arXiv.2212.08486

- [4] Fantinuoli, C., Prandi, B., 2021. Towards the Evaluation of Automatic Simultaneous Speech Translation from a Communicative Perspective. In Proceedings of IWSLT 2021.

- [5] Wang, X., Fantinuoli, C., 2024. Exploring the Correlation between Human and Machine Evaluation of Simultaneous Speech Translation. In Proceedings of the 25th Annual Conference of the European Association for Machine Translation (Volume 1), pages 325–334.
